December 28, 2025

The Moment Systems Stop Explaining Themselves

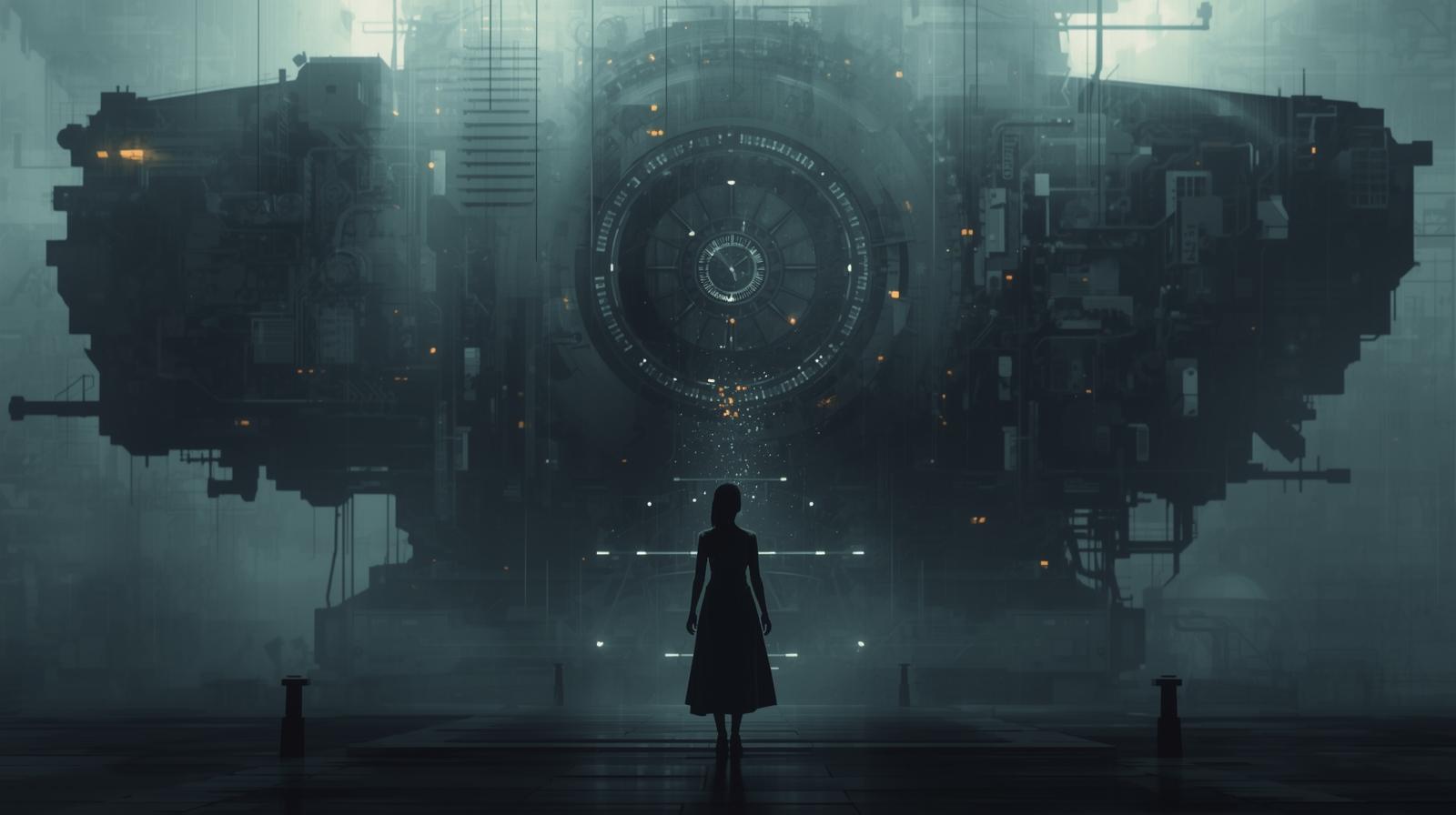

There is a moment that arrives quietly, without announcement, when you realize a system can no longer tell you why it did what it did.

Nothing is obviously broken. The interface still works. The outcome still arrives on time. The decision even looks reasonable. But when you ask why, there is no answer that makes sense in human terms. Only confidence. Only probability. Only the assurance that the system is functioning as intended.

That moment matters more than we admit.

For most of modern history, trust in systems was built on explanation. If something went wrong, someone could trace it. A person could be questioned. A rule could be pointed to. Even when outcomes were unfair, responsibility had a shape.

That shape is dissolving.

We are entering an era where systems do not fail loudly. They succeed quietly. They deliver results that are statistically sound and emotionally alien. When challenged, they do not argue. They simply repeat that the outcome is correct.

This is not a technical failure. It is a shift in authority.

When explanation disappears, trust does not vanish immediately. It thins. People adapt. They stop asking why and start asking how to avoid triggering the system again. They learn the edges. They internalize rules that were never clearly stated.

Compliance replaces understanding.

The language around these systems insists this is progress. We are told that complexity is unavoidable. That intelligence requires opacity. That no single human could understand the whole, and that this is a fair trade for efficiency and accuracy.

But this language hides something important.

When a system cannot explain itself, it also cannot be held to account in the same way. Accountability requires narrative. Responsibility requires causality that can be spoken. Without that, blame spreads thinly until it disappears.

No one decided. The system emerged.

This is where trust begins to fracture.

Not because systems are malicious, but because they become final without being answerable. Appeals exist, but they feel ceremonial. Corrections happen, but too late to matter. Explanations are offered, but they describe process, not reason.

People sense this gap intuitively. They feel judged by something that cannot be reasoned with. Over time, they stop expecting fairness. They expect inevitability.

That expectation changes behavior more than any rule ever could.

What makes this moment different from past technological shifts is not scale, but the legibility of causality. Previous systems were complicated, but legible. You could follow the chain from input to outcome. You could disagree, protest, or push back.

Today’s systems increasingly operate through layers of inference that resist linear explanation. Outcomes are produced, but their origins are probabilistic, distributed, and buried inside models no one person can fully interpret.

When causality becomes opaque, moral judgment struggles to attach.

This has subtle consequences.

Human judgment begins to feel secondary. Intuition is reframed as bias. Challenge is framed as resistance to progress. Over time, people learn to defer not because the system is right, but because arguing feels pointless.

Authority shifts quietly from explanation to output.

That is a dangerous shift, because output alone cannot carry legitimacy.

The most unsettling part is that many of these systems are good enough to avoid crisis. They are mostly accurate. Mostly fair. Mostly reliable. But mostly is not the same as accountable.

When harm is rare but unexplainable, when error is minimal but irreversible, trust degrades in a specific way. It does not explode. It drains.

People disengage emotionally while continuing to participate functionally.

At some point, society has to decide what kind of intelligence it is willing to live under.

One that is endlessly optimized and statistically justified, even when it cannot explain itself. Or one that remains bounded by the requirement to answer to humans in human terms, even if that limits efficiency.

This is not a technical choice. It is a moral one.

Restraint becomes the defining value.

Not every decision needs to be automated. Not every inference needs to be acted upon. Not every system that can decide should be allowed to decide finally.

Explanation is not a luxury feature. It is the foundation of legitimacy.

If there is a reckoning coming, it will not arrive with panic or collapse. It will arrive with a quieter realization that trust has thinned too far to be repaired casually.

The question is whether we notice that moment while choice still exists, or only after authority has fully migrated to systems that no longer feel the need to explain themselves.

Closing thought

The future will not be defined by how intelligent our systems become, but by how willing we are to limit them.

A system that cannot explain itself may still function.

But a society that accepts unexplainable authority cannot claim to be in control.

That is the moment we should be paying attention to.