November 27, 2025

Predictive Cyber Ethics: AI That Decides Who Might Commit Fraud

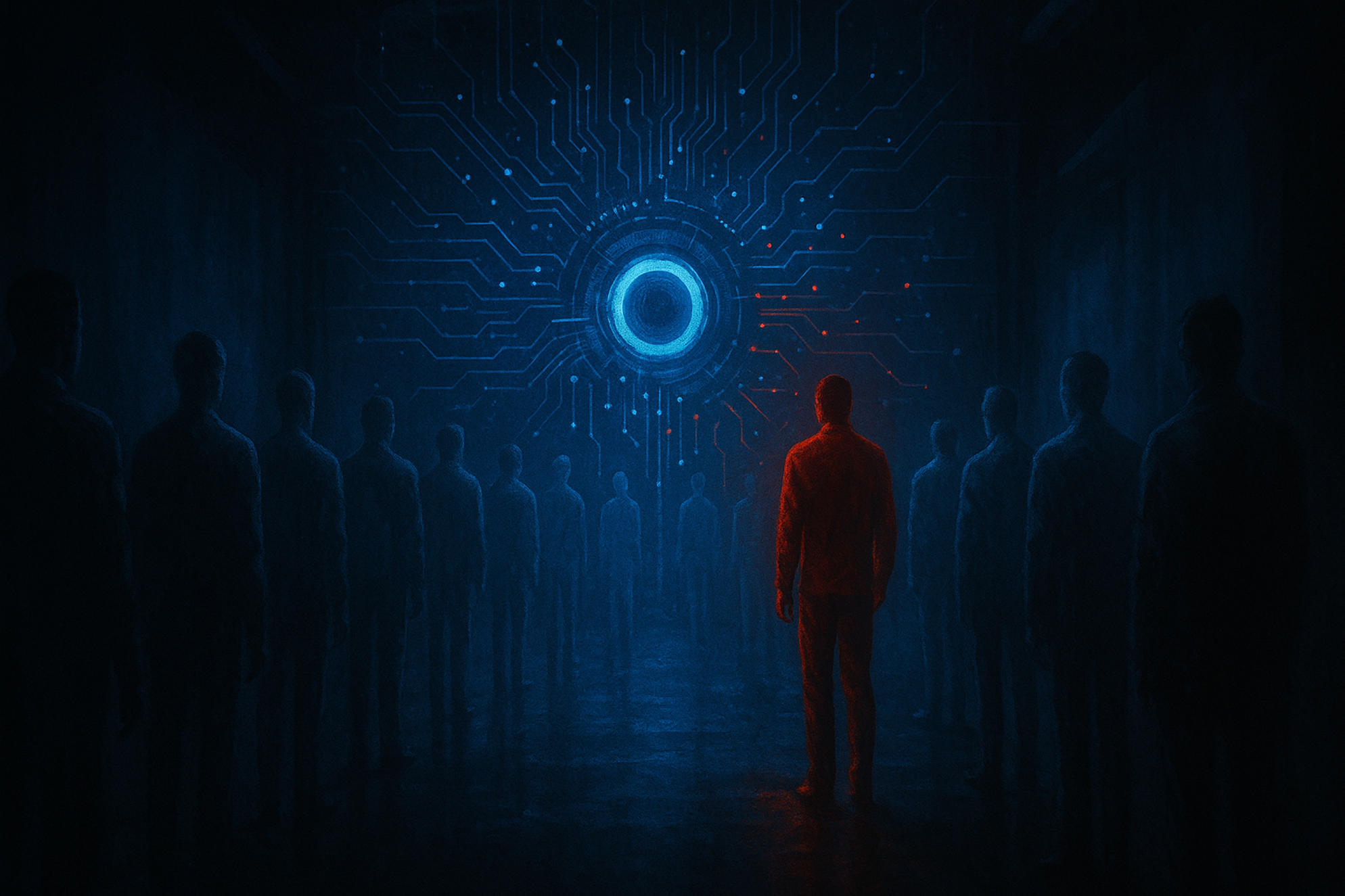

Cybersecurity was once reactive. Systems monitored threats, blocked attacks, and responded to breaches as they occurred. Today, cybersecurity is increasingly predictive. Platforms use machine learning models to evaluate user behavior and estimate the likelihood of fraudulent actions before they happen. This shift creates a powerful but troubling new form of digital governance, and the ethical questions it raises define the field of predictive cyber ethics.

Predictive cyber ethics confronts a moral question that technology has never had to answer at scale. Should AI decide who might commit fraud based on patterns, probabilities, or behavioral markers, even if no wrongdoing has occurred? And what happens when platforms treat prediction as evidence?

Modern systems increasingly rely on risk scoring that evaluates users not for what they have done, but for what they might do. This transforms cybersecurity from a defensive shield into a gatekeeper that influences opportunity, access, and reputation. Even small irregularities can place someone into a high-risk category, creating burdens that follow them across platforms.

The tension between protection and fairness has never been sharper.

The Evolution from Detection to Prediction

Traditional fraud detection was binary. A user either committed a suspicious act or did not. Predictive models transform this approach by incorporating behavioral micro-signals, device patterns, emotional cues, and historical correlations. Instead of waiting for fraud, the system anticipates it.

Fraud prediction is attractive because it reduces financial losses, increases operational efficiency, and scales easily. In general, the more data a system collects, the better it can forecast risk. Platforms justify this evolution by pointing to improved safety and reduced attack surfaces.

Yet prediction introduces ethical complexity. People are no longer judged by actions but by inferred potential.

How AI Learns to Predict Fraud

Predictive fraud systems draw from a growing body of behavioral analytics. They evaluate typing rhythm, login patterns, navigation speed, purchase timing, location discrepancies, and micro-interactions that are assumed to reflect trustworthiness. They compare these signals against historical data from known fraudulent behaviors.

From this constellation of signals, the system constructs a probability score that reflects the perceived risk that a user may attempt fraudulent activity. The score is recalculated continuously as the user interacts with the platform, so the risk estimate updates in real time.
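To make the idea of a probability score concrete, here is a minimal Python sketch. It assumes a simple logistic model over a handful of behavioral signals and smooths the score across successive interactions. The signal names, weights, prior, and smoothing factor are hypothetical illustrations, not any platform's actual model; production systems typically learn their weights from historical fraud data and use far more signals.

```python
import math

# Hypothetical signal weights and bias, chosen only for illustration.
# Real systems learn these from labeled historical fraud data.
WEIGHTS = {
    "typing_rhythm_deviation": 1.2,
    "new_device": 0.8,
    "location_mismatch": 1.5,
    "unusual_purchase_timing": 0.6,
}
BIAS = -3.0  # baseline log-odds: most sessions are assumed benign


def fraud_probability(signals: dict) -> float:
    """Logistic model: a weighted sum of signals squashed into the range 0..1."""
    z = BIAS + sum(WEIGHTS.get(name, 0.0) * value for name, value in signals.items())
    return 1.0 / (1.0 + math.exp(-z))


class RiskTracker:
    """Running risk score that is refreshed on every interaction."""

    def __init__(self, prior: float = 0.05, alpha: float = 0.3):
        self.score = prior  # hypothetical starting score for a new user
        self.alpha = alpha  # how much the newest observation counts

    def observe(self, signals: dict) -> float:
        p = fraud_probability(signals)
        # Exponential smoothing: blend the latest estimate with past ones.
        self.score = self.alpha * p + (1 - self.alpha) * self.score
        return self.score


tracker = RiskTracker()
tracker.observe({})  # an ordinary session
print(round(tracker.observe({"location_mismatch": 1.0, "new_device": 1.0}), 3))
```

In this toy setup, a single login from a new device in an unfamiliar location lifts the running score from roughly 0.05 to roughly 0.13, even though nothing fraudulent has happened.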

The challenge is that normal human behavior also contains irregularities. A tired user, a rushed purchase, a borrowed device, or an international login due to travel can appear risky even when harmless. Predictive systems often misinterpret these variations because they are optimized for pattern consistency rather than human context.
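Consistency-based detection often compares a new action against the user's own baseline. The sketch below shows how a traveler's ordinary login can look like an outlier. It makes the simplifying assumption that login hours can be scored with a plain z-score (it ignores the fact that the clock wraps at midnight), and the login history and the threshold of 3 standard deviations are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history: list, value: float, z_threshold: float = 3.0) -> bool:
    """Flag a value that sits far outside this user's own past behavior.

    Simplification: hours are treated as plain numbers, so 23:00 and 01:00
    look far apart even though they are two hours apart on the clock.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly regular history: any deviation at all is "anomalous".
        return value != mu
    return abs(value - mu) / sigma > z_threshold


# Hypothetical login hours (home time zone) for a user with a steady routine.
login_hours = [9, 9.5, 10, 9, 8.5, 9.5, 10, 9]
print(is_anomalous(login_hours, 9.25))  # the usual routine
print(is_anomalous(login_hours, 3))     # 3 a.m. home time: mid-morning abroad
```

The detector sees only the number. It has no way to know that 3 a.m. at home is a perfectly normal hour at the user's travel destination.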

The Birth of Preemptive Cyber Judgments

Once a system predicts risk, the platform must decide what to do with that prediction. Some platforms quietly increase monitoring. Others restrict certain features until the user completes additional verification. In more aggressive environments, accounts are suspended automatically.
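The graduated responses described above can be pictured as a set of thresholds on the risk score. This is a hypothetical sketch: the cut-off values and action names are illustrative assumptions, and real platforms set them as a matter of policy.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    MONITOR = "increase monitoring"
    STEP_UP = "require additional verification"
    SUSPEND = "suspend account"


# Hypothetical cut-offs, checked from most to least severe.
THRESHOLDS = [
    (0.90, Action.SUSPEND),
    (0.60, Action.STEP_UP),
    (0.30, Action.MONITOR),
]


def respond(risk_score: float) -> Action:
    """Map a predicted risk score to a preemptive platform response."""
    for cutoff, action in THRESHOLDS:
        if risk_score >= cutoff:
            return action
    return Action.ALLOW


print(respond(0.72).value)
```

Note that the only input is the score itself. Nothing in the function asks whether the user has actually done anything wrong, which is what makes these judgments preemptive.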

This creates preemptive cyber judgments, where systems penalize people before any wrongdoing has occurred. These judgments feel invisible because they do not always surface as clear violations. Users experience confusing issues such as reduced access, blocked actions, or increased friction without explanation.

A user may be flagged not because of intent but because the system interpreted a behavioral signal incorrectly. The suspicion is invisible, but the consequences are real.

The Moral Dilemma of Predicting Harm

Predictive cyber ethics challenges foundational principles of fairness and justice. In most legal systems, people are judged on their actions, not on probabilities. Predictive models invert this principle by treating potential risk as actionable information.

There is a moral cost. People may be categorized as high risk due to circumstances beyond their control. Someone traveling across borders may trigger anomaly detectors. Someone using assistive technologies may navigate interfaces differently. Someone experiencing emotional stress may behave unpredictably.

Prediction begins to act like pre-accusation.

Platforms justify this by pointing to statistical accuracy. Even if predictions are often correct, the ethical question remains. Should a model have the authority to limit a person’s digital life based on probability alone?

The Human Consequences of Being Labeled High Risk

Being labeled as a fraud risk affects more than platform access. It damages trust, reputation, and user confidence. People struggle to appeal decisions because predictive systems rarely show their reasoning.

A user may be forced into repeated identity verification processes. They may find their transactions delayed or rejected. They may lose access to key features because the system distrusts them. This can feel humiliating, especially when the person has done nothing wrong.

Predictive cyber ethics must account for the emotional and psychological burden that comes from automated suspicion. A person treated as a potential threat becomes hyperaware of every minor action. They fear triggering further scrutiny. They feel judged by a system that never explains why.

Errors Become Identity

Predictive systems work in probabilities, not certainties. A small misinterpretation can create cascading consequences. A single false signal may shape how the system evaluates every future action.

For example, a mistyped password may be interpreted as attempted intrusion. A slow response time may be treated as hesitation. A purchase of unusual value may be flagged as risk. Each of these signals becomes part of a cumulative risk score.

These errors form a digital identity. The model begins to trust its own predictions more than the user's history. This creates a feedback loop where a single false signal grows into a long-term limitation.

Prediction gains permanence.
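A minimal sketch of how one false signal can persist, assuming the risk score is a running total of flag weights. The decay factor is a hypothetical parameter: with no decay, a single mistyped password stays in the score indefinitely; with decay, clean behavior gradually outweighs it.

```python
def cumulative_risk(events: list, decay: float = 1.0) -> list:
    """Running risk total after each session.

    Each event is the flag weight raised in that session (0.0 if clean).
    decay=1.0 means past flags never fade; smaller values let them fade.
    """
    total = 0.0
    trajectory = []
    for flag_weight in events:
        total = total * decay + flag_weight
        trajectory.append(round(total, 3))
    return trajectory


# Hypothetical history: one false signal, then ten clean sessions.
events = [1.0] + [0.0] * 10
print(cumulative_risk(events)[-1])             # no decay
print(cumulative_risk(events, decay=0.7)[-1])  # older flags fade
```

After ten clean sessions the undecayed score is still 1.0, while a decay factor of 0.7 has shrunk the old flag to about 0.03. Whether a system forgets, and how fast, is a design choice with direct ethical consequences.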

The Invisibility of Algorithmic Punishment

One of the greatest dangers of predictive fraud systems is that penalties often occur without transparency. Users do not see the risk score. They do not receive explanations. They cannot challenge the logic. Instead, they experience limitations as technical glitches or unexplained friction.

Invisible punishment is ethically dangerous because it removes agency and accountability. The system exerts control without the user understanding the reason. When people cannot identify the cause of their limitations, they cannot correct misunderstandings.

Predictive cybersecurity becomes a silent judge.
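Part of what would make these penalties visible is telling users which signals drove their score. For a linear model, one simple approach, in the spirit of the reason codes lenders give for credit decisions, is to rank each signal's contribution (weight times value). The weights and signal names below are hypothetical, and models that are not linear need other attribution methods.

```python
# Hypothetical weights for a linear risk model.
WEIGHTS = {
    "location_mismatch": 1.5,
    "typing_rhythm_deviation": 1.2,
    "new_device": 0.8,
    "unusual_purchase_timing": 0.6,
}


def reason_codes(signals: dict, top_n: int = 2) -> list:
    """Return the signals that contributed most to a user's risk score."""
    contributions = {
        name: WEIGHTS[name] * value
        for name, value in signals.items()
        if name in WEIGHTS and value > 0
    }
    # Largest contribution first.
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, _ in ranked[:top_n]]


print(reason_codes({"new_device": 1.0, "location_mismatch": 1.0,
                    "unusual_purchase_timing": 0.5}))
```

Here the user would learn that the flag rests mainly on a location mismatch and a new device, which gives them something concrete to explain or contest.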

Bias Hidden Within Predictive Models

Predictive systems inherit bias from their training data. Groups with historically irregular digital access may appear riskier because their behavior patterns differ from majority norms. People with inconsistent connectivity, shared devices, or multilingual interaction patterns may be overflagged.

Cultural communication differences can also influence risk scores. A person's writing style may resemble patterns associated with fraud in training datasets. Hypercautious behavior may be misinterpreted as evasion.

The result is unequal suspicion across demographic groups. Some individuals face heightened scrutiny because the system was calibrated on the behavior of other populations, so their ordinary patterns register as deviations.
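One way to surface this kind of unequal suspicion is to audit false positive rates: among users who did not commit fraud, what share of each group was flagged anyway? The sketch below uses invented audit records, not real measurements, with hypothetical group labels drawn from the examples in this section.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Share of honest users in each group who were flagged anyway.

    Each record is a tuple (group, was_flagged, committed_fraud).
    """
    flagged_honest = defaultdict(int)
    honest = defaultdict(int)
    for group, was_flagged, committed_fraud in records:
        if not committed_fraud:
            honest[group] += 1
            if was_flagged:
                flagged_honest[group] += 1
    return {g: flagged_honest[g] / honest[g] for g in honest}


# Invented audit sample: nobody in it committed fraud.
records = (
    [("stable_connectivity", True, False)] * 2
    + [("stable_connectivity", False, False)] * 98
    + [("shared_devices", True, False)] * 12
    + [("shared_devices", False, False)] * 88
)
print(false_positive_rates(records))
```

In this made-up sample, honest users on shared devices are flagged six times as often as honest users with stable connectivity (12% versus 2%), even though none of them committed fraud.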

Predictive cyber ethics must confront the risk that bias becomes automated distrust.

When Prediction Becomes a Self-Fulfilling Cycle

Once a user enters a higher risk category, the system monitors them more closely. Increased monitoring often produces more flagged activities, even when benign. These additional flags reinforce the risk score, which leads to further monitoring.

Prediction becomes self-fulfilling. The model interprets the user through a lens of suspicion. Neutral behavior begins to resemble patterns of risk simply because the system expects it to.

The cycle continues unless a human intervenes, yet intervention is rare at scale.
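The loop can be illustrated with a small expected-value simulation. It assumes, hypothetically, that users at or above a high-risk cutoff have three times as many actions inspected, that a fixed 2% of benign actions are misread as suspicious, and that each period's score is half the previous score plus 0.1 per new flag. All parameters are illustrative.

```python
def simulate_feedback(start_score: float, periods: int = 5,
                      base_events: int = 50,
                      false_flag_rate: float = 0.02,
                      high_risk_cutoff: float = 0.5,
                      monitoring_multiplier: int = 3) -> list:
    """Expected-value model of scrutiny that grows with suspicion.

    The user's behavior is identical every period and entirely benign;
    only the amount of monitoring changes with the current score.
    """
    score = start_score
    history = []
    for _ in range(periods):
        # High-risk users have more of their actions inspected.
        scrutiny = monitoring_multiplier if score >= high_risk_cutoff else 1
        observed = base_events * scrutiny
        # A fixed share of benign actions is misread as suspicious.
        spurious_flags = observed * false_flag_rate
        # The old score decays by half; new flags push it back up.
        score = min(1.0, 0.5 * score + 0.1 * spurious_flags)
        history.append(round(score, 3))
    return history


print(simulate_feedback(0.30))  # starts just below the high-risk line
print(simulate_feedback(0.55))  # nudged over it by one false signal
```

Both simulated users behave the same way. The one who starts below the cutoff drifts down toward 0.2, while the one pushed above it settles near 0.6 and never leaves the high-risk category, because the extra scrutiny keeps generating the flags that justify it.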

Preventive Protection or Preventive Punishment

Platforms argue that predictive systems protect users from fraud by catching issues early. This is true, but only partially. Predictive systems protect platforms first and users second. When the system restricts a user out of caution, the platform avoids liability.

The user absorbs the inconvenience, lost access, or reputation burden. This flips the ethical balance. Protection becomes punishment when users suffer consequences to reduce platform risk.

Predictive cyber ethics demands a reevaluation of where the balance should lie.
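The imbalance can be stated as a standard expected-cost decision rule. Flagging a user with estimated fraud probability p lowers expected cost when p times the cost of missed fraud exceeds (1 - p) times the cost of a false flag. The costs below are hypothetical, in arbitrary units; the point is how far the threshold moves depending on whose costs are counted.

```python
def flag_threshold(cost_missed_fraud: float, cost_false_flag: float) -> float:
    """Risk level above which flagging has lower expected cost than allowing.

    Flag when p * cost_missed_fraud > (1 - p) * cost_false_flag,
    which rearranges to p > cost_false_flag / (cost_false_flag + cost_missed_fraud).
    """
    return cost_false_flag / (cost_false_flag + cost_missed_fraud)


# Hypothetical costs in arbitrary units.
platform_only = flag_threshold(cost_missed_fraud=100, cost_false_flag=1)
with_user_burden = flag_threshold(cost_missed_fraud=100, cost_false_flag=20)
print(round(platform_only, 3), round(with_user_burden, 3))
```

If a false flag is priced only as minor platform friction (1 unit against 100 for missed fraud), anyone with roughly a 1% estimated risk gets flagged. Counting the user's lost access, delays, and distress as well (20 units in this example) raises the threshold to about 17%.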

The Legal and Regulatory Void

Most regulatory frameworks do not address predictive cybersecurity. Traditional laws focus on actions, not probabilities. They define fraud as an event, not a predicted behavior. As a result, predictive systems operate without clear accountability.

Without regulation, platforms decide how prediction is used, how risk is scored, and how penalties are enforced. Users have few rights to appeal or review predictive decisions.

The regulatory vacuum creates fertile ground for ethical abuse.

How Wyrloop Evaluates Predictive Cyber Systems

Wyrloop assesses predictive cybersecurity platforms using criteria tied to transparency, fairness, proportionality, and user protection. We examine whether models provide explainability, whether penalties match the severity of risk, and whether users have meaningful ways to challenge predictive labels.

Systems that balance prediction with empathy, clarity, and user rights receive higher scores in our Predictive Ethics Integrity Index.

Conclusion

Predictive cyber ethics exposes the tension between safety and fairness in a digital world governed by algorithms. AI systems that estimate who might commit fraud carry immense power. They can protect users, but they can also restrict freedom, damage reputation, and undermine autonomy.

Prediction should guide investigation, not replace judgment. It should support security, not diminish dignity. As predictive systems evolve, their ethical foundations must evolve as well. People deserve transparency, proportionality, and the right to be treated as individuals, not as probability scores.

Fraud prediction must be intelligent, but it must also be humane.