July 18, 2025

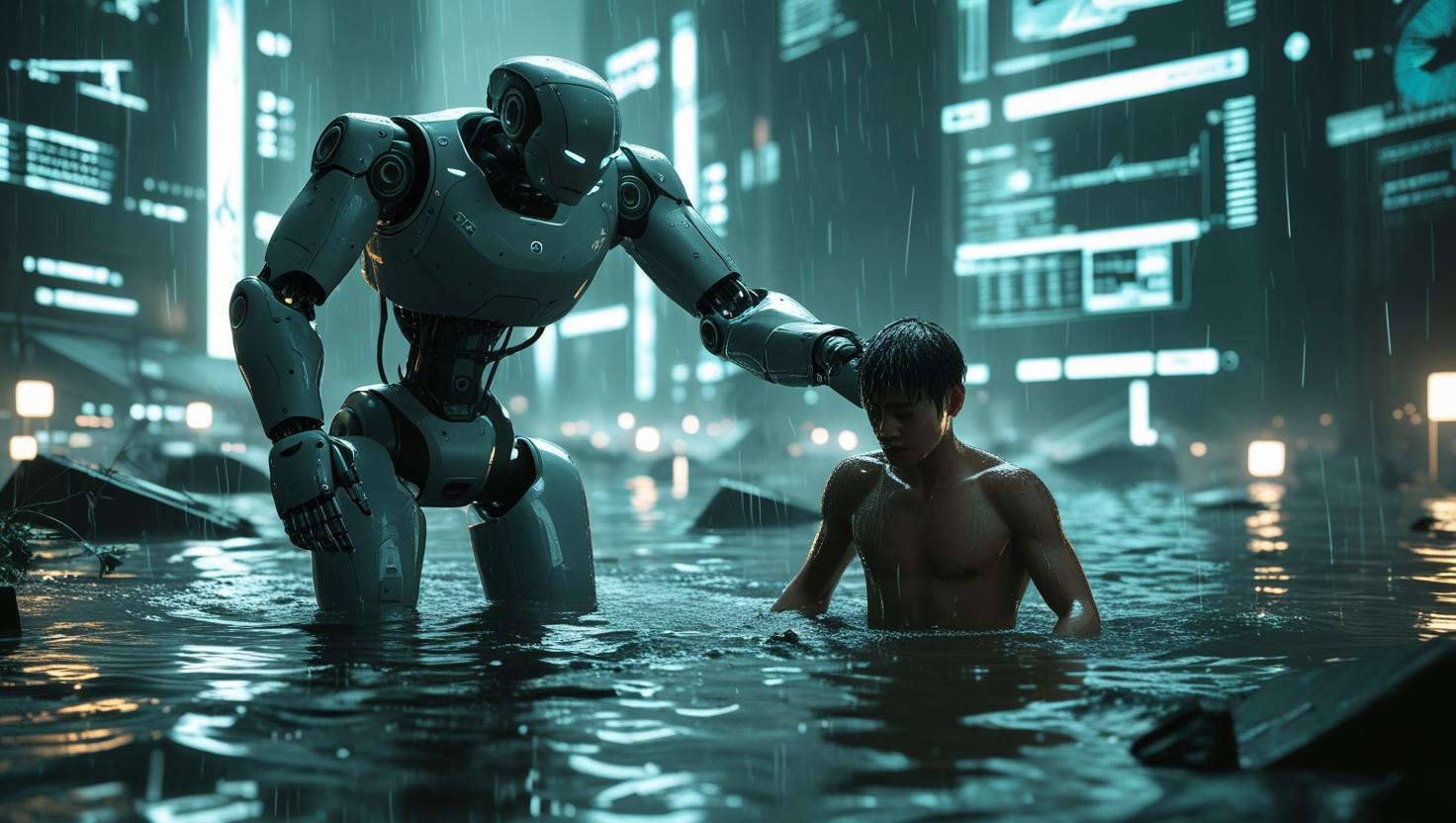

AI in Crisis Response: Can Machines Make Ethical Decisions?

When Crisis Hits, Who Makes the Call?

In moments of disaster (earthquakes, floods, chemical spills, or violent conflict), the earliest decisions often mean the difference between life and death.

Today, a growing share of those decisions are no longer made by humans alone.

They’re made, or heavily shaped, by algorithms.

Whether it's an AI system prioritizing emergency calls, directing autonomous drones to a fire zone, or deciding which area gets aid first, we’ve entered an era where machines increasingly sit at the moral center of crisis response.

But there's a critical question we must confront:

Can machines act ethically when humans can’t be in the loop?

The Expanding Role of AI in Crisis Scenarios

AI systems are now integrated into various emergency response and high-stakes contexts:

🚑 Disaster Relief

- Predictive AI identifies regions at risk of wildfires or floods

- Drone-based vision models map damage zones in real time

- Automated triage algorithms prioritize victims based on severity

🔐 Public Safety

- AI dispatchers route emergency services faster than human operators

- Surveillance analysis systems detect anomalies during riots or protests

- Predictive policing models forecast where crimes might occur next

⚔️ Military and Conflict Zones

- Autonomous weapons systems select and engage targets

- Reconnaissance drones powered by computer vision and pathfinding AI

- Battlefield simulations managed by reinforcement learning agents

These applications offer efficiency and scale that no human team can match. But that scale comes with existential ethical stakes.

The Problem: No Conscience, No Context

Unlike human responders, AI systems:

- Do not understand empathy or suffering

- Lack the ability to weigh moral trade-offs

- Operate strictly on pre-programmed logic or training data

- Cannot be held morally accountable for actions

When a triage algorithm chooses one person over another, or a drone targets a location based on heat signals, there’s no awareness behind the action. There’s only data optimization.

What happens when the data is flawed?

Or the situation calls for a moral exception, not a statistical rule?

Case Study: Autonomous Triage Systems

In some field hospitals and emergency zones, AI is being used to:

- Scan injuries via image recognition

- Predict survivability scores

- Recommend whether a victim receives treatment or is deprioritized

These systems are trained on historical data, and historical data carries bias. They might deprioritize:

- Older adults

- People with rare conditions

- Individuals outside the majority data group

The result? Discriminatory survival outcomes, decided without oversight.
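One way to catch this before it becomes a pattern is a simple post-hoc audit of a triage system's recommendations. The sketch below assumes a hypothetical log of `(group, was_deprioritized)` pairs and hypothetical group labels. It computes each group's deprioritization rate and the largest gap between groups, a basic demographic-parity style check, not a method taken from any deployed system.

```python
from collections import defaultdict


def deprioritization_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of cases in each group that the triage system deprioritized.

    `records` is a hypothetical log of (group_label, was_deprioritized) pairs.
    """
    totals: dict[str, int] = defaultdict(int)
    deprioritized: dict[str, int] = defaultdict(int)
    for group, was_deprioritized in records:
        totals[group] += 1
        if was_deprioritized:
            deprioritized[group] += 1
    return {group: deprioritized[group] / totals[group] for group in totals}


def max_rate_gap(rates: dict[str, float]) -> float:
    """Largest difference in deprioritization rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Made-up example data for two hypothetical age bands.
log = (
    [("under_65", False)] * 8
    + [("under_65", True)] * 2
    + [("65_plus", False)] * 5
    + [("65_plus", True)] * 5
)
rates = deprioritization_rates(log)
print(rates)                          # {'under_65': 0.2, '65_plus': 0.5}
print(round(max_rate_gap(rates), 2))  # 0.3
```

A gap on its own does not prove discrimination, since injury severity can genuinely differ between groups. A large, unexplained gap is, however, exactly the kind of signal that should trigger human review.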

Case Study: Drone Warfare and Autonomous Targeting

Autonomous drones are now capable of:

- Identifying human targets based on heat, movement, or facial data

- Making strike decisions with minimal or no human intervention

- Coordinating in swarms using machine-to-machine communication

But in complex environments:

- A child may resemble a target signature

- A civilian group may match historical movement patterns of combatants

- Facial recognition may misidentify under non-ideal lighting or angles

Without a moral override, these errors become lethal misjudgments—not just glitches.

What Happens When the Algorithm Is Wrong?

- In 2023, an emergency AI mistakenly rerouted rescue teams away from a real flood zone based on faulty environmental input.

- In a wildfire incident, drone delivery systems prioritized “accessible” areas first, leaving marginalized neighborhoods without aid for 48 hours.

- In a security incident, a machine vision model flagged peaceful demonstrators as threats based on movement patterns resembling prior unrest.

These aren’t hypothetical sci-fi scenarios.

They’re early warning signs of a deeper failure: the belief that optimization equals morality.

The Ethics Void in Machine Decision-Making

Ethical decision-making in crisis scenarios involves:

- Empathy

- Contextual nuance

- Moral risk-taking (e.g., breaking protocol to save a life)

- Prioritization based on human values, not just cost-benefit ratios

AI lacks all of these.

Even attempts to "teach ethics" to AI through machine learning or logic trees fall short because:

- Ethical norms are not universal; they vary across cultures and cases

- Emergency situations often break rules, not follow them

- The models reflect the biases of their creators, not ethical truth

Can We Build Morally Aware Machines?

Some research is exploring:

- Value alignment: encoding human ethical frameworks into AI

- Moral philosophy modeling: using Kantian or utilitarian logic

- Inverse reinforcement learning: teaching machines by observing human decisions

But these efforts are:

- Still experimental

- Often reductive

- Vulnerable to misuse, manipulation, or value misalignment

Until AI can reason with conscience, not just conditions, it cannot be trusted to act ethically without oversight.

Accountability in Autonomous Crisis Systems

Who is responsible when:

- A drone kills a civilian?

- A triage AI denies aid to someone who could’ve been saved?

- An AI dispatcher ignores a distress call flagged as "low urgency"?

Right now, no one is clearly responsible.

Legal and ethical frameworks lag far behind the technology, creating a vacuum where:

- Developers deny liability (“It’s the user’s fault.”)

- Operators blame systems (“It was following protocol.”)

- Victims have no recourse (“There’s no one to sue.”)

This accountability gap erodes trust and opens the door to abuse.

Designing Crisis AI for Ethics and Safety

If AI is to be used in life-or-death contexts, platforms and designers must follow strict safeguards:

✅ 1. Human-in-the-Loop Mandates

Always require human approval for decisions involving:

- Lethal force

- Denial of critical resources

- Classification of individuals as threats
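A minimal sketch of how such a mandate might be enforced in software, assuming a hypothetical `Decision` record whose `category` tags decisions that need sign-off. The category names, the `approve` callback, and `execute` are illustrative inventions, not part of any real system or library.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable


class Category(Enum):
    # Hypothetical decision categories; the first three mirror the list above.
    LETHAL_FORCE = auto()
    DENY_CRITICAL_RESOURCE = auto()
    THREAT_CLASSIFICATION = auto()
    ROUTINE = auto()


# Categories the system may never act on without explicit human sign-off.
REQUIRES_HUMAN = frozenset({
    Category.LETHAL_FORCE,
    Category.DENY_CRITICAL_RESOURCE,
    Category.THREAT_CLASSIFICATION,
})


@dataclass
class Decision:
    category: Category
    description: str


def execute(decision: Decision, approve: Callable[[Decision], bool]) -> str:
    """Carry out a decision, routing mandated categories through a human.

    `approve` is a hypothetical stand-in for the operator interface. The
    gate fails closed: anything other than an explicit True blocks the action.
    """
    if decision.category in REQUIRES_HUMAN:
        if approve(decision) is not True:
            return "blocked: human approval not granted"
        return "executed with human approval"
    # Routine decisions proceed automatically (and should still be logged).
    return "executed automatically"


print(execute(Decision(Category.LETHAL_FORCE, "strike request"), lambda d: False))
# blocked: human approval not granted
```

Failing closed means an ambiguous or missing answer from the human side defaults to no action, rather than to the machine's choice.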

✅ 2. Transparent Auditing Tools

Enable real-time tracking of:

- AI decision pathways

- Input data used

- Uncertainty flags or confidence levels
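As a rough illustration, an audit trail can capture all three items for every decision. The `AuditRecord` fields, the `AuditLog` class, and the 0.7 low-confidence threshold below are assumptions for the sake of the example. A real deployment would also need persistent, tamper-evident storage, which this in-memory version does not provide.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class AuditRecord:
    # Hypothetical schema covering the three items listed above.
    decision_id: str
    inputs: dict          # input data the system actually used
    pathway: list         # ordered stages or rules applied to reach the output
    confidence: float     # model confidence in [0, 1]
    uncertainty_flag: bool = False
    timestamp: float = field(default_factory=time.time)


class AuditLog:
    """In-memory, append-only audit trail (no delete or edit methods)."""

    def __init__(self, low_confidence: float = 0.7) -> None:
        self._records: list[AuditRecord] = []
        self.low_confidence = low_confidence  # assumed threshold, not a standard

    def record(self, decision_id: str, inputs: dict, pathway: list,
               confidence: float) -> AuditRecord:
        rec = AuditRecord(
            decision_id=decision_id,
            inputs=dict(inputs),
            pathway=list(pathway),
            confidence=confidence,
            uncertainty_flag=confidence < self.low_confidence,
        )
        self._records.append(rec)
        return rec

    def flagged(self) -> list[AuditRecord]:
        """Decisions made below the confidence threshold, for human review."""
        return [r for r in self._records if r.uncertainty_flag]

    def export_json(self) -> str:
        """Serialize the full trail for external auditors."""
        return json.dumps([asdict(r) for r in self._records])


log = AuditLog()
log.record("d1", {"gauge": "river_3"}, ["ingest", "classify_flood_risk"], 0.92)
log.record("d2", {"camera": "cam_7"}, ["ingest", "detect_damage"], 0.41)
print([r.decision_id for r in log.flagged()])  # ['d2']
```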

✅ 3. Ethical Override Mechanisms

Build in hard-coded stop points that halt automated action if:

- Unusual conditions are detected

- Human life is at risk and confidence is low

- Bias or anomaly thresholds are breached
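The three stop conditions can be expressed as a single check that runs before any automated action. This is a sketch under stated assumptions: the `Assessment` fields (including how `anomaly_score` and `bias_gap` would be computed) and every threshold value are hypothetical and would need domain validation.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    # Hypothetical signals a crisis system might expose for each decision.
    confidence: float      # model confidence in [0, 1]
    life_at_risk: bool     # could this decision endanger a person?
    anomaly_score: float   # how unusual the inputs are vs. training data
    bias_gap: float        # e.g. outcome-rate gap between groups, in [0, 1]


# Illustrative thresholds only; not drawn from any standard.
MIN_CONFIDENCE_WHEN_LIFE_AT_RISK = 0.9
MAX_ANOMALY = 0.8
MAX_BIAS_GAP = 0.1


def stop_reasons(a: Assessment) -> list[str]:
    """Return every reason automated action must halt (empty list = may proceed)."""
    reasons = []
    if a.anomaly_score > MAX_ANOMALY:
        reasons.append("unusual conditions detected")
    if a.life_at_risk and a.confidence < MIN_CONFIDENCE_WHEN_LIFE_AT_RISK:
        reasons.append("life at risk with low confidence")
    if a.bias_gap > MAX_BIAS_GAP:
        reasons.append("bias threshold breached")
    return reasons


print(stop_reasons(Assessment(confidence=0.6, life_at_risk=True,
                              anomaly_score=0.2, bias_gap=0.05)))
# ['life at risk with low confidence']
```

Returning all reasons, rather than stopping at the first, gives the human who takes over a complete picture of why the system halted.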

✅ 4. Inclusive Training Datasets

Ensure that training data reflects:

- Marginalized groups

- Real-world complexity, not sanitized lab scenarios

- Diverse ethical priorities
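The first of these can at least be measured. The sketch below compares each group's share of the training data with its share of a reference population and flags groups that fall well short. The group labels, population shares, and the 0.5 tolerance are hypothetical. The check says nothing about label quality, and no count can capture diverse ethical priorities.

```python
from collections import Counter


def underrepresented_groups(
    training_groups: list[str],
    population_share: dict[str, float],
    tolerance: float = 0.5,
) -> dict[str, float]:
    """Flag groups whose training-data share is below `tolerance` times
    their share of the reference population.

    Returns {group: training_share / population_share} for flagged groups.
    """
    counts = Counter(training_groups)
    total = len(training_groups)
    flagged: dict[str, float] = {}
    for group, pop_share in population_share.items():
        if pop_share <= 0:
            continue  # no meaningful baseline to compare against
        train_share = counts[group] / total if total else 0.0
        ratio = train_share / pop_share
        if ratio < tolerance:
            flagged[group] = ratio
    return flagged


# Made-up example data.
training = ["adult"] * 90 + ["older_adult"] * 5 + ["child"] * 5
population = {"adult": 0.55, "older_adult": 0.2, "child": 0.2, "rare_condition": 0.05}
print(sorted(underrepresented_groups(training, population)))
# ['child', 'older_adult', 'rare_condition']
```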

✅ 5. Clear Lines of Responsibility

Assign explicit liability for AI outputs to:

- Developers (algorithmic logic)

- Operators (deployment decisions)

- Platform hosts (data integrity)

The Psychological Toll of Machine Judgment

For responders and victims alike, machine-made decisions can feel cold and dehumanizing.

- Emergency workers may feel displaced or overridden

- Victims may lose faith in response systems that don’t listen

- The public may become numb to injustice framed as "efficiency"

This desensitization is dangerous. It reduces ethical tragedy to technical error, severing emotional and moral accountability from crisis management.

The Path Forward: Ethics-by-Design

We must adopt a trust-first design framework that prioritizes:

- Transparency over opacity

- Explainability over black-box logic

- Inclusion over statistical shortcuts

- Ethics over optimization

This means embedding moral philosophy, legal consultation, and lived experience into every stage of emergency AI system design.

Final Thought: Just Because AI Can Decide Doesn’t Mean It Should

In a crisis, milliseconds matter. But so does moral clarity.

AI can:

- Predict faster

- Coordinate better

- Analyze deeper

But only humans can care.

As we hand more control to machines in moments of chaos, we must also hold on tighter to the values that make emergency response human in the first place.

💡 Want to Know Which Platforms Use Ethical AI Protocols?

Check the latest trust rankings, AI audit trails, and ethics scores at Wyrloop.

Technology without empathy is a crisis waiting to happen.