August 27, 2025

AI Content Collisions: When Generators Flood Platforms With Indistinguishable Media

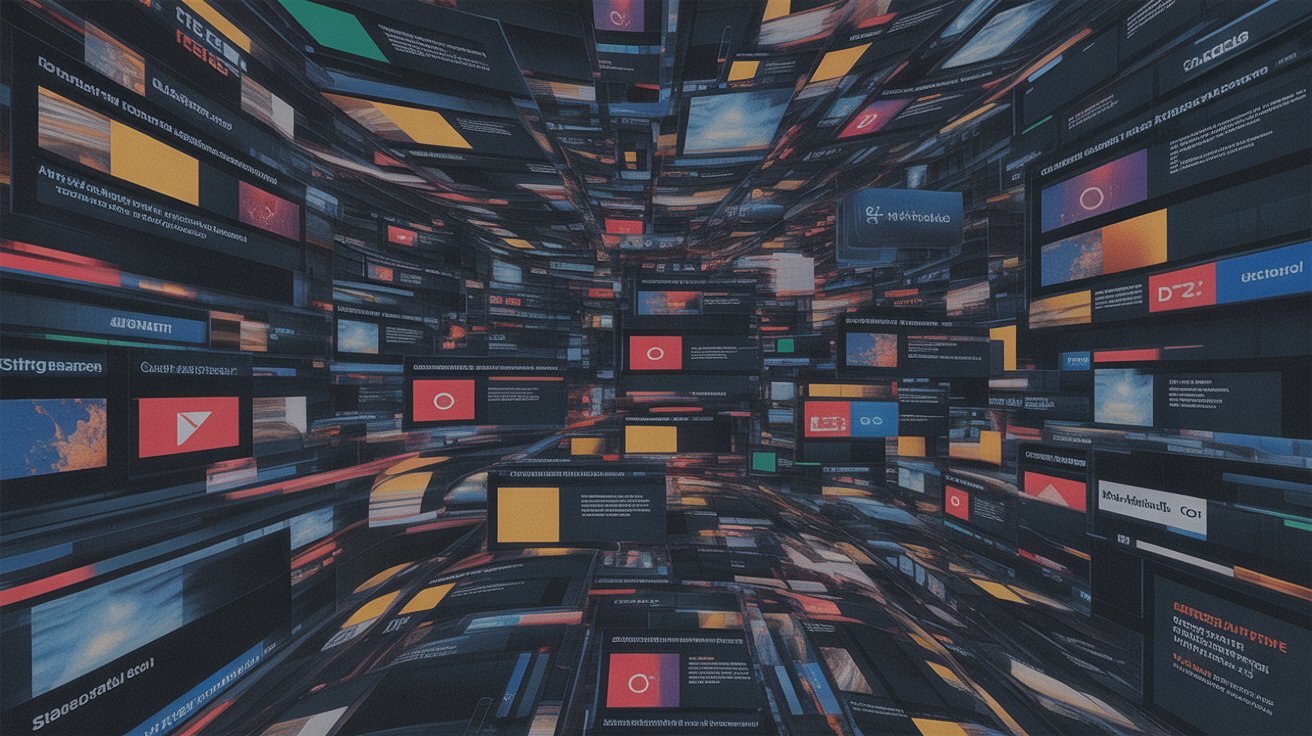

The internet has always been crowded. Blogs, reviews, memes, and videos pour in by the second. But in 2025, the noise has reached a new threshold. The rise of advanced AI generators has created a wave of indistinguishable content. Platforms now face "content collisions," moments when multiple AI systems produce near-identical media at scale. What emerges is an ecosystem where originality blurs, authenticity weakens, and user trust begins to fracture.

The Mechanism of Content Collisions

Many AI models are trained on overlapping datasets, tuned toward similar styles, and deployed in tools optimized for engagement. When multiple tools generate content about the same trending topic, the results converge. Headlines echo one another. Product reviews feel copy-pasted. Images differ only in superficial filters. The illusion of diversity hides an undercurrent of repetition.

Key causes include:

- Shared training data: Many models ingest overlapping public datasets, which pushes their outputs toward similar phrasing and imagery.

- Optimization bias: Algorithms favor the most "engaging" phrases, leading to converging language.

- Market saturation: Dozens of tools produce at industrial scale, flooding feeds with indistinct variations.
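One way to make "near-identical" concrete is to measure how much wording two texts share. The sketch below is a minimal illustration, not a method the article attributes to any platform: it splits each text into overlapping three-word windows (shingles) and computes the Jaccard similarity of the two sets. The function names, the window size of three, and the sample reviews are all assumptions chosen for the example.

```python
def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = text.lower().split()
    if len(words) < k:
        # Short texts become a single shingle; empty text yields no shingles.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0  # Two empty texts are treated as identical.
    return len(sa & sb) / len(sa | sb)


# Hypothetical reviews: the first two differ only in their last few words.
review_a = "This blender is a game changer for busy mornings and smoothies"
review_b = "This blender is a game changer for busy mornings and quick soups"
review_c = "Motor burned out after two weeks, would not buy again"

print(round(jaccard(review_a, review_b), 3))  # high overlap
print(round(jaccard(review_a, review_c), 3))  # no shared shingles
```

The first pair scores far higher than the second, which is the pattern a feed full of converging outputs would show. A word-level measure like this only catches surface repetition; paraphrased or restyled content would need a different signal.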

Platforms Struggling to Cope

For platforms, these collisions are more than clutter. They distort signals of trust:

- Search engines drown in AI-written posts optimized for keywords.

- Review sites face floods of near-identical product feedback, impossible to separate from genuine voices.

- Social feeds overflow with replicated AI memes that crowd out organic conversations.

The outcome is not just noise, but erosion of meaning. When everything looks the same, users cannot tell what to value.

The Disappearance of Originality

Traditionally, the web thrived on human difference. Regional slang, unique experiences, and personal storytelling gave content texture. AI collisions flatten these nuances. The same phrase might describe hundreds of products. The same image composition may appear across dozens of ads. The more AI converges, the less individuality remains.

This raises pressing cultural questions:

- Does originality still matter when efficiency and volume dominate?

- Can users build trust when every source echoes another?

- How will marginalized voices stand out in oceans of automated sameness?

Trust at Risk

The core casualty of AI content collisions is trust. If every review sounds algorithmic, why believe any? If every news update reads like a polished script, how do users know which version is authentic? The collapse of distinctiveness undermines credibility itself.

Potential risks include:

- Review fraud scaling faster than detection tools.

- Synthetic media loops, where models are trained on the output of other models, further diluting originality.

- Deep manipulation, where malicious actors hide misinformation in a sea of near-identical content.

The Moderation Dilemma

Moderators face a paradox. If they filter too aggressively, they risk removing legitimate human posts that happen to read like AI output. If they are too lenient, platforms drown in indistinguishable content. Automated filters struggle, since AI content often passes as "human." Manual review cannot keep pace at this volume. The line between authenticity and automation blurs until it vanishes.

Possible Solutions

The battle against collisions will not be easy, but paths exist:

- Provenance tracking: Attaching signed metadata to AI-generated media, or embedding cryptographic watermarks in it, so its origin can be checked later.

- Content authenticity layers: Allowing users to verify whether content was machine- or human-produced.

- Platform responsibility: Enforcing caps on automated publishing to prevent flood dynamics.

- Cultural adaptation: Shifting value toward communities that emphasize verified human storytelling.
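Two of the ideas above, provenance tracking and publishing caps, can be sketched in a few lines. What follows is an illustrative sketch under stated assumptions, not a description of how any platform works. For provenance, a keyed hash (HMAC-SHA256) over a small metadata record stands in for a cryptographic watermark; it is a sidecar record rather than a mark embedded in the media, and a real deployment would more likely use public-key signatures so that verifiers never hold the signing key. For caps, a sliding-window counter limits posts per account. `SECRET_KEY`, `sign_content`, `verify_content`, and `PublishCap` are hypothetical names invented for this example.

```python
import hashlib
import hmac
import json
import time
from collections import defaultdict, deque
from typing import Optional

# Hypothetical signing key held by the generator's operator. In practice it
# would come from a secrets manager, never from source code.
SECRET_KEY = b"demo-key-not-for-production"


def sign_content(content: str, generator: str) -> dict:
    """Build a provenance record with an HMAC-SHA256 tag over its fields."""
    record = {
        "generator": generator,
        "sha256": hashlib.sha256(content.encode()).hexdigest(),
    }
    payload = json.dumps(record, sort_keys=True).encode()
    record["tag"] = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_content(content: str, record: dict) -> bool:
    """Check that the record matches the content and that the tag is valid."""
    claimed = {k: v for k, v in record.items() if k != "tag"}
    if claimed.get("sha256") != hashlib.sha256(content.encode()).hexdigest():
        return False  # Content was altered after signing.
    payload = json.dumps(claimed, sort_keys=True).encode()
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record.get("tag", ""))


class PublishCap:
    """Sliding-window cap: at most max_posts per account per window."""

    def __init__(self, max_posts: int, window_seconds: float):
        self.max_posts = max_posts
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # account -> recent post times

    def allow(self, account: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        recent = self._history[account]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_posts:
            return False
        recent.append(now)
        return True


record = sign_content("A generated product blurb.", "demo-model")
print(verify_content("A generated product blurb.", record))  # record matches
print(verify_content("An edited product blurb.", record))    # content changed

cap = PublishCap(max_posts=2, window_seconds=60.0)
print([cap.allow("bot-account", now=t) for t in (0.0, 1.0, 2.0, 61.0)])
```

Neither piece settles the harder questions the list raises. A provenance record only helps when generators choose to attach it and platforms choose to check it, and a per-account cap can be sidestepped by spreading posts across many accounts.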

A Future of Overlapping Signals

The next phase of the internet may be defined less by originality and more by discernment. Users will not just consume content, but actively question: Who made this? Why does it look familiar? What makes it worth trusting? Platforms will need to surface answers, or risk losing their communities to fatigue and indifference.

Conclusion: Meaning Over Volume

AI content collisions highlight a central tension of the digital age. Machines can generate infinite words, images, and videos, but meaning cannot be automated. If platforms allow indistinguishable media to dominate, the internet risks becoming a hall of mirrors, where every reflection looks the same and trust becomes impossible.

The challenge of 2025 is not how much content we can create, but how much of it remains meaningful.